Bootstrapping

What is Bootstrapping?

Bootstrapping is a resampling method that has become increasingly popular in statistics and data science because it can quantify the uncertainty of parameter estimates without strong assumptions about the underlying population distribution.

The method generates new samples from the available data by resampling with replacement and uses these samples to estimate the sampling distribution of a statistic of interest or to perform hypothesis tests.

The idea is often traced back to the nineteenth century, when the French mathematician Pierre-Simon Laplace proposed generating random samples from a known distribution to estimate the probability of rare events.

However, its modern form was first proposed by Bradley Efron in the late 1970s.

Since then, it has become a valuable tool for researchers and practitioners seeking deeper insight into their data and better-informed decisions.

One of its key benefits is flexibility. Conventional estimation techniques often rely on assumptions about the underlying population distribution, which may not hold in practice.

Bootstrapping, on the other hand, requires no assumptions about the shape of the population distribution and can be used to estimate many parameters, including the mean, standard deviation, variance, and correlation coefficient.

This makes it a valuable tool for handling complex datasets and solving real-world problems.

In addition to being flexible, it is relatively simple and can be performed with standard statistical software such as R or Python. This has opened the technique to a wide range of researchers and practitioners, from novice statisticians to experienced data analysts.

Despite its many benefits, it has limitations. The procedure can be computationally intensive, particularly with large datasets or complex models.

It may also be sensitive to outliers in the data, which can lead to inaccurate estimates.

With careful thought and proper use, it remains an essential tool for statisticians and data analysts seeking deeper insight into their data and better-informed decisions.

This article explores its fundamental principles, including the main techniques, their applications, and real-world examples of how it can be used to tackle complex problems in various fields.

We will also discuss some of its common pitfalls and limitations and give guidelines for its proper use.

By the end of this article, readers will have a thorough understanding of the principles and their practical applications and be able to apply this powerful method to their own data analysis projects.

Key Takeaways

- Bootstrapping is a powerful statistical method that has become increasingly popular.

- It offers several benefits over traditional statistical techniques: it is non-parametric and distribution-free, it is helpful for small sample sizes, and it gives accurate estimates and more accurate confidence intervals.

- Like any statistical procedure, it has limitations, including computational intensity, possible bias, problems with dependent data, sampling variability, and the assumption of random sampling.

- Estimating the sampling distribution of the statistic of interest can give more accurate confidence intervals, which are essential for making statistical inferences.

- It is an adaptable method that can be applied to many data types and problem domains.

- Researchers and practitioners should be aware of its assumptions and potential biases and take steps to mitigate these risks.

- This important statistical method offers several benefits over traditional statistical techniques.

- By giving more accurate estimates of the sampling distribution of the statistic of interest, it can help researchers and practitioners make better-informed decisions based on their data.

Types of Bootstrapping Strategies

There are several types of bootstrapping methods, each with its own advantages and disadvantages. This section examines the most common types and their applications.

1. Non-parametric

Non-parametric bootstrapping is the most common type and is used to estimate the sampling distribution of a statistic without making any assumptions about the underlying population distribution.

This method randomly resamples the available data with replacement to produce many new datasets, each the same size as the original data.

The statistic of interest is then computed for each new dataset, and the distribution of these computed values is used to estimate the sampling distribution of the original statistic.

This is especially helpful with small sample sizes or when the underlying population distribution is unknown.
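The steps above can be sketched in Python with only the standard library. This is a minimal illustration, not a reference implementation: the function name `bootstrap_replicates`, the choice of 2,000 resamples, the fixed seed, and the ten data values are all assumptions made for the example.

```python
import random
import statistics


def bootstrap_replicates(data, statistic, n_resamples=2000, seed=0):
    """Compute `statistic` on `n_resamples` resamples of `data` drawn with replacement."""
    rng = random.Random(seed)
    n = len(data)
    replicates = []
    for _ in range(n_resamples):
        # Same size as the original data; drawing with replacement means some
        # observations may appear several times and others not at all.
        resample = rng.choices(data, k=n)
        replicates.append(statistic(resample))
    return replicates


data = [2.1, 3.4, 1.9, 5.6, 4.2, 3.3, 2.8, 6.1, 3.9, 4.7]  # illustrative values
reps = bootstrap_replicates(data, statistics.fmean)

# The spread of the replicates estimates the standard error of the sample mean.
boot_se = statistics.stdev(reps)
print(f"sample mean = {statistics.fmean(data):.2f}, bootstrap SE = {boot_se:.3f}")
```

Passing a different function, such as `statistics.median`, bootstraps a different statistic without any other change.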

2. Parametric

Parametric bootstrapping is used when the underlying population distribution is known or can be estimated with a parametric model.

In this procedure, the model parameters are estimated from the original data, and new datasets are generated by sampling from the fitted distribution.

The statistic of interest is then computed for each new dataset, and the distribution of these computed values is used to estimate the sampling distribution of the original statistic.

NOTE

Parametric bootstrapping is especially valuable when the form of the underlying distribution is known and its parameters can be estimated precisely.
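A minimal sketch of the parametric variant, assuming for illustration that the data follow a normal distribution. The function name `parametric_bootstrap_replicates` and the data values are hypothetical; the only difference from the non-parametric version is that new datasets are simulated from the fitted model instead of being resampled from the data.

```python
import random
import statistics


def parametric_bootstrap_replicates(data, statistic, n_resamples=2000, seed=0):
    """Parametric bootstrap under an assumed normal model for `data`."""
    rng = random.Random(seed)
    n = len(data)
    # Step 1: estimate the model parameters from the original data.
    mu_hat = statistics.fmean(data)
    sigma_hat = statistics.stdev(data)
    replicates = []
    for _ in range(n_resamples):
        # Step 2: simulate a new dataset from the fitted model, not from the data itself.
        simulated = [rng.gauss(mu_hat, sigma_hat) for _ in range(n)]
        # Step 3: recompute the statistic on the simulated dataset.
        replicates.append(statistic(simulated))
    return replicates


data = [2.1, 3.4, 1.9, 5.6, 4.2, 3.3, 2.8, 6.1, 3.9, 4.7]  # illustrative values
reps = parametric_bootstrap_replicates(data, statistics.median)
print(f"parametric bootstrap SE of the median = {statistics.stdev(reps):.3f}")
```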

3. Bootstrap Aggregating

Bootstrap aggregating, or bagging, is a method used to reduce the variance of a statistical model by generating many bootstrap samples and fitting a model to each sample.

The predictions from the individual models are combined (for example, averaged for regression or put to a majority vote for classification) into a single, more accurate prediction. This method is especially helpful with complex models, such as decision trees or neural networks, whose variance is high.
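A small, self-contained illustration of bagging, assuming one-split regression trees ("stumps") as the base model so that no machine-learning library is needed. The helper names `fit_stump` and `bagged_predict`, the 50 models, and the noisy step-shaped data are illustrative choices, not a standard API.

```python
import random
import statistics


def fit_stump(xs, ys):
    """Fit a one-split regression tree (a "stump") by minimizing squared error."""
    overall = statistics.fmean(ys)
    # Baseline: no split (threshold = +inf sends every x to the left leaf).
    best = (sum((y - overall) ** 2 for y in ys), float("inf"), overall, overall)
    pairs = sorted(zip(xs, ys))
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # cannot split between identical x values
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        l_mean, r_mean = statistics.fmean(left), statistics.fmean(right)
        sse = sum((y - l_mean) ** 2 for y in left) + sum((y - r_mean) ** 2 for y in right)
        if sse < best[0]:
            best = (sse, (pairs[i - 1][0] + pairs[i][0]) / 2, l_mean, r_mean)
    _, threshold, l_mean, r_mean = best
    return lambda x: l_mean if x <= threshold else r_mean


def bagged_predict(xs, ys, x_new, n_models=50, seed=0):
    """Average the predictions of stumps fitted to bootstrap samples (bagging)."""
    rng = random.Random(seed)
    n = len(xs)
    predictions = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap sample of row indices
        model = fit_stump([xs[i] for i in idx], [ys[i] for i in idx])
        predictions.append(model(x_new))
    return statistics.fmean(predictions)  # aggregate by averaging


noise = random.Random(1)
xs = [i / 2 for i in range(40)]  # x from 0 to 19.5
ys = [(0.0 if x < 10 else 3.0) + noise.gauss(0, 1) for x in xs]  # noisy step (illustrative)
print(bagged_predict(xs, ys, 4.0), bagged_predict(xs, ys, 15.0))
```

Libraries such as scikit-learn provide ready-made bagging estimators; the hand-written version only makes the resample, fit, and average steps explicit.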

4. Jackknife

The jackknife is a resampling technique closely related to the non-parametric bootstrap. It systematically leaves out one observation (or a small group of observations) at a time and recalculates the statistic of interest.

The process is repeated for every observation, and the resulting leave-one-out values are used to estimate the bias and standard error of the original statistic.

This is especially helpful with small sample sizes and for checking how strongly individual observations, such as outliers, influence the statistic of interest.
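The leave-one-out idea can be sketched as follows. `jackknife_se` uses the standard jackknife variance formula; `most_influential` is a hypothetical helper added to show how the same leave-one-out values reveal which observation moves the statistic most. The data are made up, with an outlier deliberately placed last.

```python
import math
import statistics


def jackknife_se(data, statistic):
    """Jackknife standard error: recompute `statistic` with each observation left out."""
    n = len(data)
    # Leave-one-out estimates, one per observation.
    loo = [statistic(data[:i] + data[i + 1:]) for i in range(n)]
    loo_mean = statistics.fmean(loo)
    # Jackknife variance: (n - 1) / n times the sum of squared deviations.
    variance = (n - 1) / n * sum((v - loo_mean) ** 2 for v in loo)
    return math.sqrt(variance)


def most_influential(data, statistic):
    """Index of the observation whose removal changes the statistic the most."""
    full = statistic(data)
    changes = [abs(statistic(data[:i] + data[i + 1:]) - full) for i in range(len(data))]
    return max(range(len(data)), key=changes.__getitem__)


data = [2.1, 3.4, 1.9, 5.6, 4.2, 3.3, 2.8, 6.1, 3.9, 14.0]  # last value is an outlier
print(jackknife_se(data, statistics.fmean), most_influential(data, statistics.fmean))
```

For the sample mean, the jackknife standard error coincides with the textbook formula s / sqrt(n), which makes a convenient sanity check.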

Bootstrapping is a powerful statistical method for estimating the sampling distribution of a statistic; in its non-parametric form, it does so without assumptions about the underlying population distribution.

NOTE

The choice of bootstrapping method depends on the nature of the data and the problem at hand. Careful thought should be given to the appropriate use of each method.

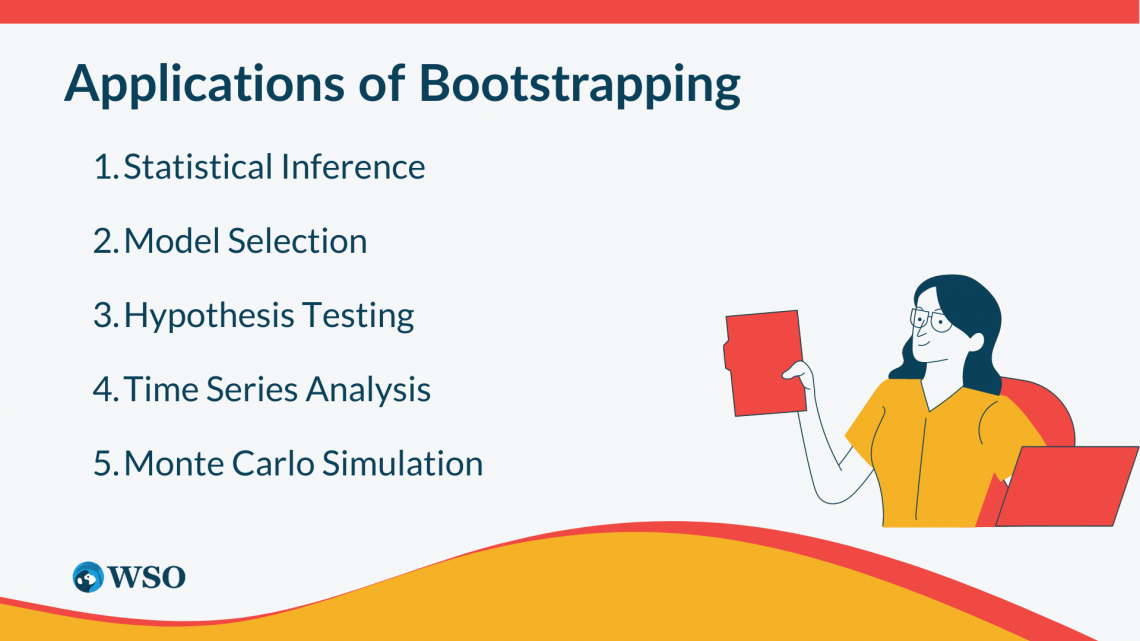

Applications of Bootstrapping

Bootstrapping has many applications in fields including finance, economics, engineering, science, and sociology. This section examines some common applications.

1. Statistical Inference

One of its most common applications is statistical inference, where it is used to estimate the sampling distribution of a statistic and compute its confidence interval.

This is especially valuable with small sample sizes or when the underlying population distribution is unknown.
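One simple way to turn bootstrap replicates into a confidence interval is the percentile method, sketched below under illustrative assumptions (5,000 resamples, a fixed seed, made-up data, and the hypothetical function name `percentile_ci`). Other interval methods exist, for example bias-corrected variants; the percentile method is shown because it is the easiest to read.

```python
import random
import statistics


def percentile_ci(data, statistic, confidence=0.95, n_resamples=5000, seed=0):
    """Bootstrap percentile confidence interval for `statistic`."""
    rng = random.Random(seed)
    n = len(data)
    reps = sorted(statistic(rng.choices(data, k=n)) for _ in range(n_resamples))
    alpha = 1 - confidence
    # Read the alpha/2 and 1 - alpha/2 quantiles off the sorted replicates.
    lo = reps[round(alpha / 2 * (n_resamples - 1))]
    hi = reps[round((1 - alpha / 2) * (n_resamples - 1))]
    return lo, hi


data = [2.1, 3.4, 1.9, 5.6, 4.2, 3.3, 2.8, 6.1, 3.9, 4.7]  # illustrative values
low, high = percentile_ci(data, statistics.median)
print(f"95% percentile CI for the median: ({low:.2f}, {high:.2f})")
```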

2. Model Selection

It can also be used for model selection, where it estimates the expected prediction error of each candidate model so that their performance can be compared.

This is especially helpful with complex models, such as decision trees or neural networks, whose variance is high.
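One bootstrap approach to model comparison is the out-of-bag (leave-one-out bootstrap) error: fit each candidate model on a bootstrap sample and measure its error on the observations that were not drawn. The sketch below compares two hypothetical candidates, a constant model and a straight line, on made-up linear data; the function names and settings are illustrative assumptions.

```python
import random
import statistics


def fit_mean(xs, ys):
    """Candidate model 1: always predict the mean of y."""
    m = statistics.fmean(ys)
    return lambda x: m


def fit_line(xs, ys):
    """Candidate model 2: ordinary least-squares straight line."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:  # degenerate resample (all x equal): fall back to a constant
        return lambda x: my
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return lambda x: my + slope * (x - mx)


def oob_error(xs, ys, fit, n_resamples=200, seed=0):
    """Mean squared error on observations left out of each bootstrap sample."""
    rng = random.Random(seed)
    n = len(xs)
    errors = []
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]
        chosen = set(idx)
        out_of_bag = [i for i in range(n) if i not in chosen]
        if not out_of_bag:
            continue  # every point was drawn; nothing left to test on
        model = fit([xs[i] for i in idx], [ys[i] for i in idx])
        errors.extend((ys[i] - model(xs[i])) ** 2 for i in out_of_bag)
    return statistics.fmean(errors)


noise = random.Random(42)
xs = list(range(30))
ys = [2 * x + noise.gauss(0, 1) for x in xs]  # illustrative linear data
print(oob_error(xs, ys, fit_mean), oob_error(xs, ys, fit_line))
```

The model with the lower estimated error would be preferred; the same loop works for any `fit` function that returns a prediction function.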

3. Hypothesis Testing

It can be used for hypothesis testing, such as testing the significance of a difference between two groups or of the correlation between two variables.

This is especially helpful with non-normal data or when the assumptions of conventional hypothesis tests are doubtful.
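A minimal sketch of a two-sample bootstrap test for a difference in means, assuming the approach of shifting both groups to a common mean so that resampling happens under the null hypothesis. The function name `bootstrap_mean_diff_test`, the 5,000 resamples, and the measurements are illustrative assumptions.

```python
import random
import statistics


def bootstrap_mean_diff_test(a, b, n_resamples=5000, seed=0):
    """Two-sided bootstrap test of H0: the two groups have equal means."""
    rng = random.Random(seed)
    mean_a, mean_b = statistics.fmean(a), statistics.fmean(b)
    observed = mean_a - mean_b
    pooled = statistics.fmean(a + b)
    # Impose H0 by shifting both groups to the pooled mean (their shapes are kept).
    a0 = [x - mean_a + pooled for x in a]
    b0 = [x - mean_b + pooled for x in b]
    extreme = 0
    for _ in range(n_resamples):
        # Resample each group separately, with replacement, at its original size.
        diff = (statistics.fmean(rng.choices(a0, k=len(a0)))
                - statistics.fmean(rng.choices(b0, k=len(b0))))
        if abs(diff) >= abs(observed):
            extreme += 1
    # The add-one correction keeps the estimated p-value strictly above zero.
    return observed, (extreme + 1) / (n_resamples + 1)


group_a = [9.8, 10.4, 11.1, 10.0, 10.7, 9.5]  # illustrative measurements
group_b = [7.9, 8.4, 8.8, 7.5, 8.1, 8.6]
diff, p_value = bootstrap_mean_diff_test(group_a, group_b)
print(f"difference = {diff:.2f}, p = {p_value:.4f}")
```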

4. Time Series Analysis

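It can be used in time series analysis to estimate confidence intervals for forecasted values and to assess the accuracy of a forecasting model. Because time series observations are usually dependent, variants such as the block bootstrap, which resamples contiguous blocks of observations, are typically used instead of resampling individual points.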

NOTE

This procedure is especially valuable with non-linear time series or when the underlying distribution of the data is unknown.
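Because ordinary resampling breaks the time ordering, time series work usually relies on block variants. The sketch below shows a moving block bootstrap under illustrative assumptions: a simulated AR(1) series, a block length of 10, and hypothetical function names. It compares single-point resampling (block length 1) with block resampling for the standard error of the mean.

```python
import random
import statistics


def moving_block_resample(series, block_length, rng):
    """Build one resample by concatenating randomly chosen overlapping blocks."""
    n = len(series)
    starts = range(n - block_length + 1)  # start index of every overlapping block
    out = []
    while len(out) < n:
        s = rng.choice(starts)
        out.extend(series[s:s + block_length])  # keep consecutive values together
    return out[:n]  # trim the last block so the length matches the original


def block_bootstrap_se(series, statistic, block_length=5, n_resamples=1000, seed=0):
    """Standard error of `statistic` estimated with the moving block bootstrap."""
    rng = random.Random(seed)
    reps = [statistic(moving_block_resample(series, block_length, rng))
            for _ in range(n_resamples)]
    return statistics.stdev(reps)


# Illustrative autocorrelated series: an AR(1) process with coefficient 0.7.
gen = random.Random(3)
series = [0.0]
for _ in range(199):
    series.append(0.7 * series[-1] + gen.gauss(0, 1))

print(block_bootstrap_se(series, statistics.fmean, block_length=1),   # single points
      block_bootstrap_se(series, statistics.fmean, block_length=10))  # blocks of 10
```

With positively autocorrelated data such as this series, resampling single points usually understates the standard error, and the block version reports a larger value. Choosing the block length is a tuning decision in practice.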

5. Monte Carlo Simulation

It can be used in Monte Carlo simulation, where random samples are generated repeatedly to estimate the probability of rare events.

This is especially valuable in finance and engineering, for example to assess an investment's risk or a system's reliability.
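A resampling-based risk estimate can be sketched as follows: simulate many future paths by drawing historical daily returns with replacement and count how often the cumulative loss crosses a threshold. The return values, the 20-day horizon, the 10% threshold, and the function name `prob_loss_exceeds` are made up for illustration, and treating daily returns as independent is a simplifying assumption.

```python
import random


def prob_loss_exceeds(daily_returns, horizon, threshold, n_sims=10000, seed=0):
    """Estimate P(cumulative loss over `horizon` days >= `threshold`) by resampling returns."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        # Simulate one path by drawing historical daily returns with replacement
        # (this treats daily returns as independent, which is an assumption).
        growth = 1.0
        for r in rng.choices(daily_returns, k=horizon):
            growth *= 1 + r
        if growth - 1 <= -threshold:
            hits += 1
    return hits / n_sims


returns = [0.012, -0.008, 0.004, -0.021, 0.015, -0.003, 0.007, -0.030, 0.010, 0.002]  # illustrative
print(prob_loss_exceeds(returns, horizon=20, threshold=0.10))
```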

It is a flexible method that can be used in many applications, giving researchers and practitioners a useful tool for gaining deeper insight into their data and making better-informed decisions.


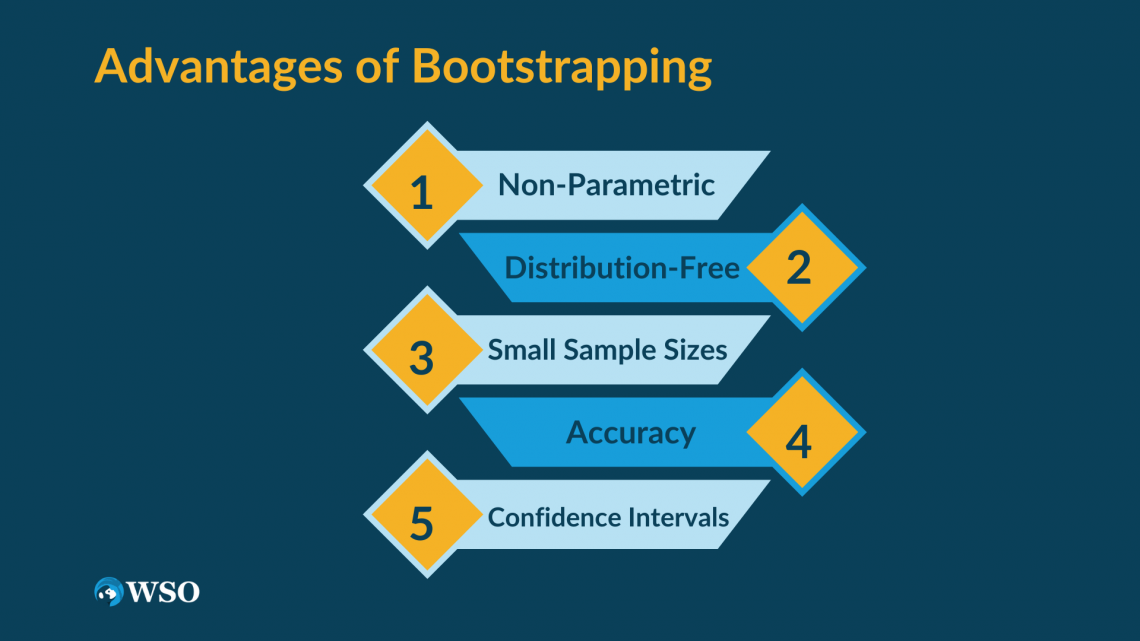

Advantages of Bootstrapping

It offers several benefits over traditional statistical techniques. This section examines some of its key benefits.

1. Non-Parametric

One of its most significant benefits is that it is a non-parametric method.

This means it makes no assumptions about the underlying population distribution, unlike traditional statistical methods such as the t-test, which assumes normally distributed data.

This makes it a more robust procedure, especially with non-normal data.

2. Distribution-Free

Another benefit is that it is a distribution-free procedure. This means it does not require knowledge of the specific distribution of the statistic of interest.

Instead, it estimates the statistic's sampling distribution by repeatedly resampling from the available data. This makes it an adaptable procedure that can be applied to many data types and problem domains.

3. Small Sample Sizes

Bootstrapping is especially valuable with small sample sizes. Traditional statistical techniques often rely on large-sample approximations to estimate the sampling distribution of the statistic of interest accurately.

NOTE

In contrast, it generates many samples of the same size as the original data, so the sampling distribution can be estimated without relying on those large-sample approximations.

4. Accuracy

It can give a more accurate estimate of the sampling distribution of the statistic of interest.

By generating many samples from the available data and computing the statistic of interest for each one, it measures how much the statistic varies because of sampling error, which gives a direct estimate of its sampling distribution.

5. Confidence Intervals

It can give more accurate confidence intervals for the statistic of interest.

Estimating the statistic's sampling distribution directly can give more accurate confidence intervals, which are essential for making statistical inferences.

In summary, it offers several benefits over traditional statistical techniques: it is non-parametric and distribution-free, it is valuable for small sample sizes, and it gives accurate estimates and confidence intervals.

NOTE

These benefits make it a powerful and adaptable method for researchers and practitioners seeking deeper insight into their data and better-informed decisions.

Disadvantages of Bootstrapping

While it offers several benefits, it has limitations. This section discusses some of its key drawbacks.

1. Computational Intensity

One of its biggest limitations is computational intensity. It requires generating many resamples from the available data and computing the statistic of interest for each resample.

This can be a time-consuming and computationally expensive process, especially with large datasets.

2. Bias

It can introduce bias into the estimate of the statistic of interest.

The resampling process can produce samples that are not representative of the population, especially when the sample size is small or the underlying distribution is highly skewed. This can lead to biased estimates of the statistic of interest.
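The bootstrap can also estimate the bias itself: compare the average of the replicates with the original estimate. The sketch below uses the population-variance formula `statistics.pvariance`, which divides by n and is known to underestimate the variance, so a negative bias estimate is expected. The function name `bootstrap_bias`, the resample count, and the data are illustrative assumptions.

```python
import random
import statistics


def bootstrap_bias(data, statistic, n_resamples=4000, seed=0):
    """Return (estimate, bootstrap bias estimate, bias-corrected estimate)."""
    rng = random.Random(seed)
    n = len(data)
    theta_hat = statistic(data)
    reps = [statistic(rng.choices(data, k=n)) for _ in range(n_resamples)]
    # Bias estimate: average of the replicates minus the original estimate.
    bias = statistics.fmean(reps) - theta_hat
    return theta_hat, bias, theta_hat - bias


data = [2.1, 3.4, 1.9, 5.6, 4.2, 3.3, 2.8, 6.1, 3.9, 4.7]  # illustrative values
# pvariance divides by n, so it tends to underestimate the population variance.
est, bias, corrected = bootstrap_bias(data, statistics.pvariance)
print(f"estimate = {est:.3f}, bias = {bias:.3f}, corrected = {corrected:.3f}")
```

A bias-corrected estimate can have higher variance than the original, so a common rule of thumb is to apply the correction only when the estimated bias is large relative to the standard error.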

3. Dependence

The standard method assumes that the observations in the dataset are independent and identically distributed.

In practice, however, observations may depend on one another, especially in time series or spatial analysis.

In such cases, the standard method may not give accurate estimates of the sampling distribution of the statistic of interest.

4. Sampling Variability

It generates many random resamples from the available data, so the bootstrap results are themselves subject to sampling variability. The estimates can shift from one run to the next, especially when relatively few resamples are used, which adds uncertainty to the results.
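Run-to-run variability is easy to see by repeating the same bootstrap with different random seeds. In this illustrative sketch (hypothetical function name `bootstrap_se`, made-up data), the standard-error estimates spread out noticeably with 50 resamples and much less with 2,000.

```python
import random
import statistics


def bootstrap_se(data, n_resamples, seed):
    """Bootstrap standard error of the mean for a given number of resamples and seed."""
    rng = random.Random(seed)
    n = len(data)
    reps = [statistics.fmean(rng.choices(data, k=n)) for _ in range(n_resamples)]
    return statistics.stdev(reps)


data = [2.1, 3.4, 1.9, 5.6, 4.2, 3.3, 2.8, 6.1, 3.9, 4.7]  # illustrative values
for b in (50, 2000):
    # Repeat the whole bootstrap with 10 different seeds and look at the spread.
    estimates = [bootstrap_se(data, b, seed) for seed in range(10)]
    print(f"B = {b:4d}: SE estimates range from {min(estimates):.3f} to {max(estimates):.3f}")
```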

5. Assumption of Random Sampling

It assumes that the original sample was obtained through random sampling. If the sampling was not random, or was otherwise biased, the resulting estimates of the statistic of interest can be inaccurate.

In summary, it has several limitations, including computational intensity, possible bias, problems with dependent data, sampling variability, and the assumption of random sampling.

These limitations should be carefully considered when using it as a statistical procedure, especially with large datasets or non-randomly sampled data.

Bootstrapping FAQs

Bootstrapping is a statistical procedure that resamples data with replacement to estimate the sampling distribution of a statistic of interest.

It works by generating many resamples from the available data and computing the statistic of interest for each resample.

By repeating this process many times, it estimates the sampling distribution of the statistic of interest, which can be used to compute confidence intervals or conduct hypothesis tests.

It is a powerful procedure that can be used in many applications, including hypothesis testing, model selection, and estimation of confidence intervals. It is especially helpful when the sample size is small or the underlying distribution is unknown.

Potential bias can be mitigated by using bias-corrected or bias-corrected and accelerated (BCa) bootstrap methods. These methods adjust the bootstrap confidence interval to account for bias (and, in the BCa case, skewness) in the estimate of the statistic of interest, leading to more accurate results.

It is essential to carefully consider the potential for bias and choose an appropriate method when using bootstrapping.
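The bias-corrected and accelerated (BCa) interval can be sketched with the standard library alone. It shifts the percentile levels using a bias-correction term z0 (from the share of replicates below the original estimate) and an acceleration term a (from jackknife values). The function name `bca_interval`, the settings, and the right-skewed data are illustrative assumptions; for real analyses, SciPy's `scipy.stats.bootstrap` computes BCa intervals (its default `method`).

```python
import random
import statistics
from statistics import NormalDist


def bca_interval(data, statistic, confidence=0.95, n_resamples=4000, seed=0):
    """Bias-corrected and accelerated (BCa) bootstrap confidence interval."""
    rng = random.Random(seed)
    n = len(data)
    theta_hat = statistic(data)
    reps = sorted(statistic(rng.choices(data, k=n)) for _ in range(n_resamples))
    norm = NormalDist()

    # Bias correction z0 from the share of replicates below the original estimate
    # (clamped away from 0 and 1 so that inv_cdf stays finite).
    share = sum(r < theta_hat for r in reps) / n_resamples
    share = min(max(share, 1 / n_resamples), 1 - 1 / n_resamples)
    z0 = norm.inv_cdf(share)

    # Acceleration a from the skewness of the jackknife (leave-one-out) values.
    loo = [statistic(data[:i] + data[i + 1:]) for i in range(n)]
    loo_mean = statistics.fmean(loo)
    num = sum((loo_mean - v) ** 3 for v in loo)
    den = 6 * sum((loo_mean - v) ** 2 for v in loo) ** 1.5
    a = num / den if den else 0.0

    def adjusted_level(q):
        # Map a nominal quantile level q to the BCa-adjusted level.
        z = norm.inv_cdf(q)
        return norm.cdf(z0 + (z0 + z) / (1 - a * (z0 + z)))

    alpha = 1 - confidence
    lo_idx = min(int(adjusted_level(alpha / 2) * n_resamples), n_resamples - 1)
    hi_idx = min(int(adjusted_level(1 - alpha / 2) * n_resamples), n_resamples - 1)
    return reps[lo_idx], reps[hi_idx]


data = [0.4, 1.1, 0.2, 2.9, 0.7, 5.3, 0.9, 1.6, 0.3, 3.8, 0.6, 1.2]  # right-skewed, illustrative
low, high = bca_interval(data, statistics.fmean)
print(f"95% BCa interval for the mean: ({low:.2f}, {high:.2f})")
```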

It can be used with many data types, including continuous, discrete, binary, and categorical data. However, it is important to consider the nature of the data and the assumptions of the statistical model when using bootstrapping.

For instance, the standard method may not be appropriate for data with dependent observations or non-random sampling.

It can be implemented in a variety of software packages and programming languages, including R, Python, SAS, and Stata.

While some programming skills may be needed to implement bootstrapping in these environments, there are also easy-to-use packages and tools that make it accessible to a wider range of researchers and practitioners.
